Our Technology & Artificial Intelligence Briefing provides an overview of recent developments in EU Artificial Intelligence Regulation, including:

A. Introduction

B. Timeline

C. Implications for Companies (Extraterritorial Reach)

D. Risk-Based Approach

E. General Purpose AI (GPAI)

F. Transparency Requirements

G. Measures in Support of Innovation

H. New EU Regulators Matrix

I. Penalties

J. Next Steps

A. Introduction

1. The Council of the European Union approved the long-awaited Regulation on Artificial Intelligence (AI Act) on 21 May 2024.

2. The AI Act represents a landmark legislative initiative worldwide. Through its adoption, the EU attempts to strike a balance between accelerating innovation in the field of AI and safeguarding the trustworthiness and accountability of AI systems within the EU.

3. The AI Act will soon be published in the EU's Official Journal, once it has been signed by the presidents of the European Parliament and of the Council. It will enter into force 20 days after its official publication.

4. Due to its staggered implementation, it is important for companies to understand which actions they need to take to ensure compliance before the upcoming deadlines. This Briefing aims to provide an overview of the applicable timeline and some important highlights for companies.

5. For a more detailed analysis of the content of the AI Act, please refer to our previous Briefing here.

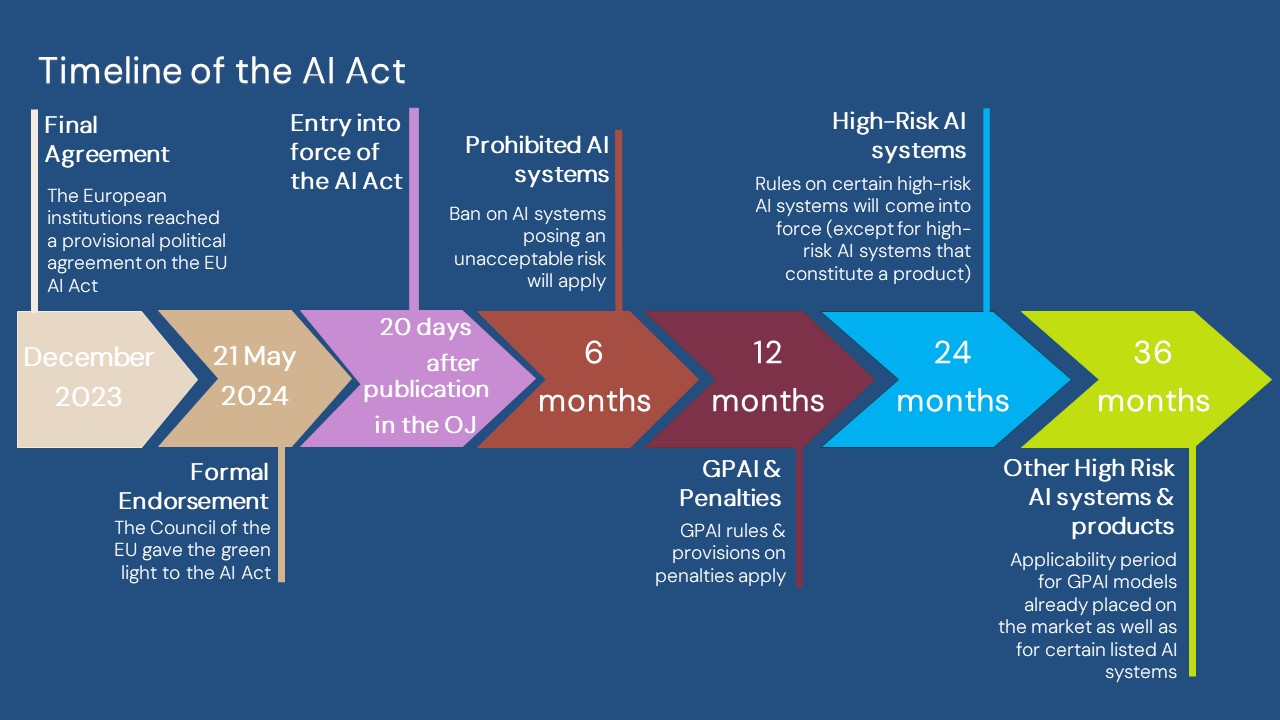

B. Timeline

C. Implications for Companies (Extraterritorial Reach)

1. A set of obligations is imposed on operators across the AI value chain, namely:

a. providers, whether established inside or outside the EU, that place AI systems or General Purpose AI 1 on the market or put them into service in the EU, or whose systems' output is used within the EU;

b. deployers that have their place of establishment or are located within the EU;

c. product manufacturers placing on the market or putting into service an AI system together with their product and under their own name or trademark;

d. importers and distributors of AI systems within the EU;

e. authorized representatives located or established in the EU that are contractually obliged to perform and carry out the obligations and procedures laid down in the AI Act on behalf of an AI system and/or General Purpose AI provider.

2. As with the General Data Protection Regulation, the AI Act has an extraterritorial reach, ie its provisions shall bind providers of AI systems and/or General Purpose AI, irrespective of their place of establishment or location, so long as the output produced by the AI system is intended for use in the EU.

D. Risk-Based Approach

1. A risk-based approach is adopted, whereby AI systems are classified according to the level of risk they pose. A different set of obligations applies to each risk level, in particular:

a. unacceptable risk (eg social scoring, AI emotion recognition systems in the workplace) - absolute prohibition;

b. high-risk (eg biometric identification) - authorized subject to specific requirements, obligations and an ex-ante conformity assessment, as well as to oversight by competent authorities;

c. limited risk (eg chatbots) - additional transparency obligations;

d. minimal or no risk (eg spam filters) - general obligations. The voluntary adoption of codes of conduct is encouraged.
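
For companies building an internal inventory of their AI systems, the four tiers above can be captured in a simple lookup. The following is a minimal illustrative sketch in Python, not a legal classification tool: the names `RiskTier`, `TREATMENT`, `EXAMPLE_TIERS` and `treatment_for` are hypothetical, and the only use cases included are the examples quoted in this Briefing.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high-risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal or no risk"


# Regulatory treatment per tier, paraphrasing the list above.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "absolute prohibition",
    RiskTier.HIGH: "specific requirements, ex-ante conformity assessment, oversight",
    RiskTier.LIMITED: "additional transparency obligations",
    RiskTier.MINIMAL: "general obligations; voluntary codes of conduct encouraged",
}

# Hypothetical inventory containing only the examples named in this Briefing.
EXAMPLE_TIERS = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "emotion recognition in the workplace": RiskTier.UNACCEPTABLE,
    "biometric identification": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def treatment_for(use_case):
    """Return (tier, treatment) for a listed use case, or None if it is unlisted."""
    tier = EXAMPLE_TIERS.get(use_case.strip().lower())
    if tier is None:
        # Unlisted systems need a case-by-case legal qualification.
        return None
    return tier, TREATMENT[tier]


if __name__ == "__main__":
    for name in ("Chatbot", "Spam filter", "Credit scoring"):
        print(name, "->", treatment_for(name))
```

Returning `None` for an unlisted use case is a deliberate choice in this sketch: it flags systems that still need to be qualified under the AI Act instead of silently assigning them a default tier.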

E. General Purpose AI (GPAI)

1. GPAI models fall within the ambit of the AI Act and must also comply with specific obligations, such as transparency requirements. A distinction is arguably made between ‘standard’ GPAI models and GPAI models that pose a systemic risk (based on their particularly high-impact capabilities).

2. More rigorous obligations apply to providers of GPAI models with systemic risk. These relate to cybersecurity protection, the model evaluations required before the models are placed on the market, and adversarial testing.

F. Transparency Requirements

1. Providers of high-risk AI systems shall design and develop them in a manner that ensures their transparent operation. As a result, deployers shall obtain information on how the systems work and which data are processed.

2. A fundamental rights impact assessment must be carried out in specific cases (ie by deployers that are bodies governed by public law or private entities providing public services, as well as by deployers of certain high-risk AI systems listed in the AI Act, such as banking or insurance entities). In certain cases, users must also be informed that they are interacting with an AI system.

G. Measures in Support of Innovation

1. The AI Act lays down measures to support innovation, with a particular focus on SMEs including start-ups.

2. The AI Act provides for the setting up of at least one AI regulatory sandbox 2 at national level. Member States are required to ensure that their national competent authorities establish such a sandbox (in physical, digital or hybrid form).

3. Participation of start-ups and SMEs in sandboxes is also promoted.

4. Real-world testing of AI systems may also be operated and supervised in the context of the AI regulatory sandbox upon agreement between the national competent authorities and the participants in the AI regulatory sandbox.

H. New EU Regulators Matrix

1. A new supervision landscape is underway for the effective implementation of the AI Act.

2. At a central level and within the European Commission, the EU AI Office has been set up and is responsible for the enforcement of the AI Act across the EU. The Office shall be supported by a scientific panel of independent experts.

3. In parallel, the establishment of the EU AI Board, composed of representatives from Member States, is intended to assist the European Commission and Member States in achieving a consistent application of the AI Act. An advisory forum for stakeholders will also provide technical expertise to the EU AI Board and the European Commission.

4. At national level, each Member State shall designate at least one notifying authority and at least one market surveillance authority as the national competent authorities for the supervision of the AI Act's implementation.

I. Penalties

1. Non-compliance with the AI Act provisions may lead to the imposition of considerable fines, with the highest reaching the greater of €35m or 7% of the total annual worldwide turnover of the previous financial year, depending on the infringement.

2. Fines are also provided for the supply of incorrect or misleading information (up to €7.5m or 1% of the total annual worldwide turnover, whichever is higher).

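3. Fines for SMEs, including start-ups, are lower: each fine is capped at the lower, rather than the higher, of the fixed amount and the turnover percentage.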
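
The "greater of" rule in the fine caps above is simple arithmetic, and a short worked example may help when estimating exposure. The sketch below is illustrative only: the names `FINE_CAPS` and `max_fine` are hypothetical, the figures are those quoted in this section, and the lower caps for SMEs are not modelled. It computes the statutory maximum, not the fine an authority would actually impose.

```python
from decimal import Decimal

# Caps quoted in this section: (fixed amount in EUR, share of annual worldwide turnover).
FINE_CAPS = {
    "highest_tier": (Decimal("35000000"), Decimal("0.07")),
    "incorrect_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine(tier, annual_worldwide_turnover):
    """Return the maximum fine in EUR: the greater of the fixed cap and the turnover share.

    Pass the turnover as an int or a string to avoid float rounding.
    """
    fixed_cap, turnover_share = FINE_CAPS[tier]
    turnover_based = turnover_share * Decimal(annual_worldwide_turnover)
    return max(fixed_cap, turnover_based)


if __name__ == "__main__":
    # Turnover of EUR 1bn: 7% is EUR 70m, which exceeds the EUR 35m fixed cap.
    print(max_fine("highest_tier", 1_000_000_000))
    # Turnover of EUR 100m: 7% is EUR 7m, so the EUR 35m fixed cap applies.
    print(max_fine("highest_tier", 100_000_000))
```

For larger groups the turnover-based figure will usually be the operative cap, which is why the worldwide (not EU-only) turnover basis matters.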

J. Next Steps

In anticipation of the publication of the final AI Act text in the EU’s Official Journal, companies are advised to take the following steps:

1. Conduct a risk assessment by mapping their AI systems, as a different set of obligations applies to each AI system depending on its risk level. Considering that the definition of AI systems under the AI Act is quite broad, the qualification of each system is critical.

2. Review contracts in place with suppliers to assess whether obligations for compliance with the AI Act should be included.

3. Prepare and establish a system of internal governance for the use of AI systems, and assign roles and responsibilities internally.

Download our Technology & Artificial Intelligence Briefing.

1 Under the EU AI Act, ‘General-purpose AI model’ means an AI model, including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications, except AI models that are used for research, development or prototyping activities before they are placed on the market.

2 Under the EU AI Act, ‘AI regulatory sandbox’ means a controlled framework set up by a competent authority which offers providers or prospective providers of AI systems the possibility to develop, train, validate and test, where appropriate in real-world conditions, an innovative AI system, pursuant to a sandbox plan for a limited time under regulatory supervision.